The Meta Robots Tag Checker helps you analyze and validate robots meta tags, X-Robots-Tag headers, and canonical directives for a single page or a batch of URLs. It detects indexing conflicts and helps you catch accidental deindexing before it affects your visibility in search.

What the Meta Robots Tag Checker Does

The Meta Robots Tag Checker scans web pages for meta robots directives and HTTP X-Robots-Tag headers. It identifies whether a page is set to noindex or nofollow, and whether its canonical tag conflicts with those directives.

This tool makes it easy for webmasters and SEOs to understand which pages are accessible to Google and which ones are blocked. It also detects accidental “noindex” errors that could prevent your pages from showing in search results, especially after migrations or CMS updates where technical settings are often overlooked.
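To illustrate what scanning a page for meta robots directives involves, here is a minimal Python sketch that uses only the standard library. It is not the tool's actual implementation; the class name `RobotsMetaParser`, the function `extract_meta_robots`, and the list of crawler names it inspects are assumptions made for the example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and crawler-specific tags."""

    # Hypothetical set of meta names to inspect; extend as needed.
    ROBOT_NAMES = {"robots", "googlebot", "bingbot"}

    def __init__(self):
        super().__init__()
        self.directives = {}  # meta name -> set of lowercase directives

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key.lower(): (value or "") for key, value in attrs}
        name = attr.get("name", "").strip().lower()
        if name in self.ROBOT_NAMES:
            tokens = (t.strip().lower() for t in attr.get("content", "").split(","))
            self.directives.setdefault(name, set()).update(t for t in tokens if t)


def extract_meta_robots(html):
    """Return a dict mapping each robots meta name to its set of directives."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


sample = '<html><head><meta name="robots" content="NOINDEX, follow"></head></html>'
print(extract_meta_robots(sample))
```

A page counts as blocked from indexing in this sketch when `"noindex"` appears in any of the returned sets.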

Key Features for Accurate Robots Meta Validation

The robots meta tag checker tool combines multiple validation layers in one scan, giving a complete SEO overview.

- Meta Robots Scan: Checks for index, noindex, follow, or nofollow directives.

- X-Robots-Tag Audit: Analyzes HTTP headers returned by servers.

- Canonical Validation: Ensures canonical URLs are correctly referenced. This is especially useful when paired with a deeper canonical review using a Bulk Canonical Checker.

- Robots.txt Check: Verifies access permissions for crawlers, helping you spot conflicts between robots meta tags and your robots.txt rules.

- Conflict Detection: Highlights contradictory instructions, such as a “noindex” directive combined with a canonical tag.

- Bulk URL Support: Analyze up to 50 URLs simultaneously.

- CSV Export: Save reports for audits or SEO documentation.

For example, if a noindex directive meant for staging accidentally carries over to your live URLs, the tool flags it so you can fix it before the pages drop out of search results. It’s also smart to verify that those pages return proper server responses using a Bulk HTTP Status Checker during audits.

How to Use the Meta Robots Tag Checker Tool

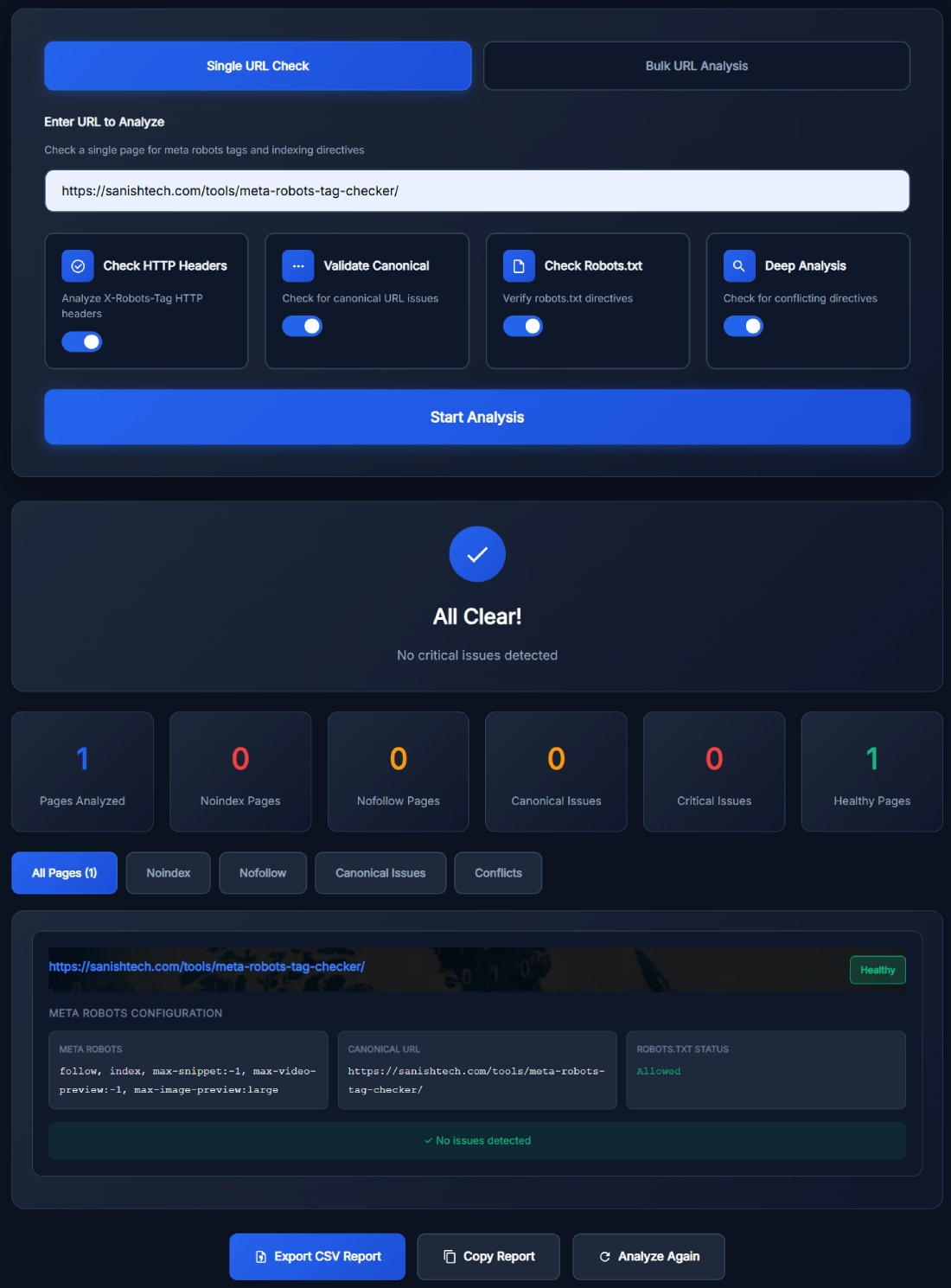

You can use the tool in single-URL or bulk-URL mode.

- Enter URL(s): Paste one or more website links.

- Select Validation Options: Enable checks for HTTP headers, canonical URLs, and robots.txt.

- Click Start Analysis: The tool begins scanning and displays results in a few seconds.

- Review Results: You’ll see each page’s robots status, canonical link, and potential conflicts.

- Export Report: Download all findings in CSV format.

Each page result includes its robots configuration, canonical URL, and crawl permissions, color-coded as Healthy, Warning, or Error. If you’re auditing large sites, exporting the data alongside URL lists from an XML Sitemap URL Extractor can speed up your workflow.

Benefits of Using the Robots Meta Tag Checker Tool

This tool saves time by automating one of the most overlooked technical SEO checks.

- Prevents Indexing Mistakes: Detects accidental noindex or disallow rules.

- Improves SEO Visibility: Ensures important pages are crawlable.

- Simplifies Auditing: Bulk validation helps analyze entire sites quickly.

- Reduces Technical Conflicts: Identifies mismatched canonical or robots settings.

- Ideal for Agencies: Perfect for pre-launch audits, migrations, and compliance checks.

Whether you’re optimizing a small blog or a large eCommerce site, it ensures your indexing directives are clean and consistent. For content-heavy sites, combining robots validation with a Bulk Meta Title & Description Checker helps keep both visibility and indexing aligned.

Real Example of Validating Meta Robots Directives

Suppose your blog has 200 articles, and 10 of them were mistakenly tagged with “noindex.” You might not notice it until rankings drop. By scanning with the Meta Robots Tag Checker, you’ll immediately see which pages have noindex or nofollow tags.

It also flags cases where canonical URLs conflict with robots directives. That means you can fix SEO errors before they hurt your site’s visibility and organic traffic.

Pro Tips for Getting the Best Results with the Meta Robots Tag Checker

- Always test after major site updates or redesigns.

- Avoid combining “noindex” with canonical tags, since the two send search engines conflicting signals.

- Use robots meta tags for page-level indexing control and robots.txt for site-wide crawl rules.

- Run monthly scans to maintain indexing health.

- Always double-check that important landing pages are indexable.

A few quick audits using this tool can save you from costly ranking issues later.

FAQ about Meta Robots Tag Checker

What is a meta robots tag checker?

A meta robots tag checker is a tool that analyzes a webpage’s robots meta tags and X-Robots-Tag headers. It helps you verify whether a page is indexable or blocked from search engines, so you can confirm that your site’s crawling and indexing settings are properly configured.

How does this robots meta tag checker tool work?

The tool scans your webpage’s source code and HTTP headers to detect directives like noindex, nofollow, or canonical conflicts. It then classifies results as valid, invalid, or conflicting, providing a quick overview of which pages can appear in search results.

Can I check multiple pages at once?

Yes, the Meta Robots Tag Checker supports bulk URL analysis. You can input up to 50 links at once, making it ideal for large website audits, SEO agencies, and webmasters managing multi-page sites.

What happens if a page has both noindex and canonical tags?

This is a conflict. A noindex tag tells Google not to index a page, while a canonical tag tells it which URL is the preferred version to index. Using both can confuse crawlers. The tool highlights these cases so you can remove or adjust directives accordingly.
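As a rough illustration of how such a conflict could be detected, the Python sketch below compares a page's directives with its canonical URL. The function name `canonical_conflict`, the returned labels, and the simple URL normalization are assumptions for the example, not the tool's actual rules.

```python
from urllib.parse import urldefrag


def canonical_conflict(page_url, directives, canonical_url):
    """Return a conflict label, or None when no conflict is detected.

    The labels are hypothetical; a real checker may classify differently.
    """
    if "noindex" not in directives or not canonical_url:
        return None
    # Rough normalization: drop fragments and trailing slashes before comparing.
    page = urldefrag(page_url).url.rstrip("/")
    canonical = urldefrag(canonical_url).url.rstrip("/")
    if page != canonical:
        return "noindex+foreign-canonical"
    return "noindex+self-canonical"


print(canonical_conflict(
    "https://example.com/a",
    {"noindex", "follow"},
    "https://example.com/b",
))
```

Separating the two cases lets you prioritize: a noindexed page whose canonical points elsewhere is usually the one to review first.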

Can I validate meta robots directives inside HTTP headers?

Yes. The tool analyzes X-Robots-Tag headers in HTTP responses, helping you catch server-level directives that affect indexing even when meta tags look correct in the HTML.
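The following Python sketch shows one way header values could be parsed once you have them. It assumes the `X-Robots-Tag` values have already been read from the response; `parse_x_robots_tag` and `VALUE_DIRECTIVES` are names invented for the example, and the handling of crawler prefixes is simplified.

```python
# Directives that carry their own "name: value" form, so a colon after them
# does not indicate a crawler prefix. Assumed list for this sketch.
VALUE_DIRECTIVES = {
    "unavailable_after",
    "max-snippet",
    "max-image-preview",
    "max-video-preview",
}


def parse_x_robots_tag(header_values):
    """Map each user agent ('*' means all crawlers) to its set of directives."""
    result = {}
    for value in header_values:
        agent = "*"
        head, sep, rest = value.partition(":")
        head_clean = head.strip().lower()
        # Treat "googlebot: noindex" as a crawler-specific rule.
        if sep and "," not in head and head_clean not in VALUE_DIRECTIVES:
            agent, value = head_clean, rest
        for token in value.split(","):
            token = token.strip().lower()
            if token:
                result.setdefault(agent, set()).add(token)
    return result


print(parse_x_robots_tag(["noindex, nofollow", "googlebot: noindex"]))
```

Note that this simplified parser splits on every comma, so an `unavailable_after` date that itself contains a comma would need extra handling.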

How often should I audit meta robots tags?

Audit your robots meta tags at least once a month or after major updates, migrations, or plugin changes. Frequent audits ensure no unintentional directives impact visibility in Google Search.

Can I export the meta robots audit as a CSV file?

Yes. After analysis, click “Export CSV Report” to download a structured audit summary. This file includes page URLs, robots tags, canonical data, and indexing status for easier sharing and reporting.
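If you want to build a similar report from your own checks, a minimal Python sketch might look like the following. The column names in `FIELDS` and the function `to_csv` are hypothetical and do not reflect the exact layout of the tool's export.

```python
import csv
import io

# Hypothetical column names; the tool's real export may differ.
FIELDS = ["url", "robots", "canonical", "indexable"]


def to_csv(rows):
    """Serialize a list of audit-result dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


report = to_csv([
    {
        "url": "https://example.com/a",
        "robots": "noindex, follow",
        "canonical": "https://example.com/a",
        "indexable": "no",
    },
])
print(report)
```

Values that contain commas, such as a combined robots directive, are quoted automatically by the `csv` module.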

What is the difference between robots meta tags and robots.txt?

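Robots meta tags apply to individual pages and control indexing at the page level. Robots.txt controls which parts of a website crawlers can access. Note that a crawler blocked by robots.txt never fetches the page, so it cannot see a noindex tag on it. Using both correctly gives you control over crawling and indexing.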
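One conflict worth checking is a page that is disallowed in robots.txt and also carries noindex, because a crawler that cannot fetch the page cannot read the directive. The Python sketch below uses the standard library's `urllib.robotparser` on robots.txt text you have already downloaded; the function name `blocked_noindex` is an assumption for the example.

```python
from urllib.robotparser import RobotFileParser


def blocked_noindex(robots_txt, url, directives, user_agent="*"):
    """Return True when a noindex page is also disallowed by robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    crawl_allowed = parser.can_fetch(user_agent, url)
    return (not crawl_allowed) and "noindex" in directives


robots_txt = "User-agent: *\nDisallow: /private/\n"
print(blocked_noindex(robots_txt, "https://example.com/private/page", {"noindex"}))
```

When the function returns `True`, the usual options are to allow crawling so the noindex can be seen, or to drop the noindex and rely on the robots.txt rule alone.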

Why are robots meta tags important for SEO?

Meta robots tags tell search engines how to handle your content: whether to index it, follow its links, or skip it. Properly configured tags help search engines focus on your most valuable pages.

Does this tool also check canonical and X-Robots-Tag directives together?

Yes. The Meta Robots Tag Checker cross-validates canonical and X-Robots-Tag directives to find inconsistencies. This gives you a fuller view of your website’s indexing health and helps you stay aligned with Google’s best practices.