Free Robots.txt Generator Online

Create a custom robots.txt file to control how search engines crawl your website

The robots.txt file might look like a tiny text document, but it holds real power. It tells Google, Bing, and other bots what they can or can’t explore on your site. Using this free robots.txt generator online, you can create a customized robots.txt file in seconds — no technical headaches or manual formatting errors.

Whether you’re managing a WordPress blog, an eCommerce store, or a client’s SEO setup, this tool simplifies everything. You just choose what to allow, what to block, and hit “Generate.” The tool instantly builds a crawl-ready robots.txt file that aligns with your SEO goals. If you’re unsure whether important pages are being blocked by accident, pairing this with the Meta Robots Tag Checker can help you confirm index/noindex signals match your crawl rules.

What the Free Robots.txt Generator Online Does

Think of this tool as your site’s traffic controller. It lets you decide which parts of your website are open to search engine crawlers and which ones stay private. You don’t need to write a single line of syntax — the online robots.txt creator tool does it all for you.

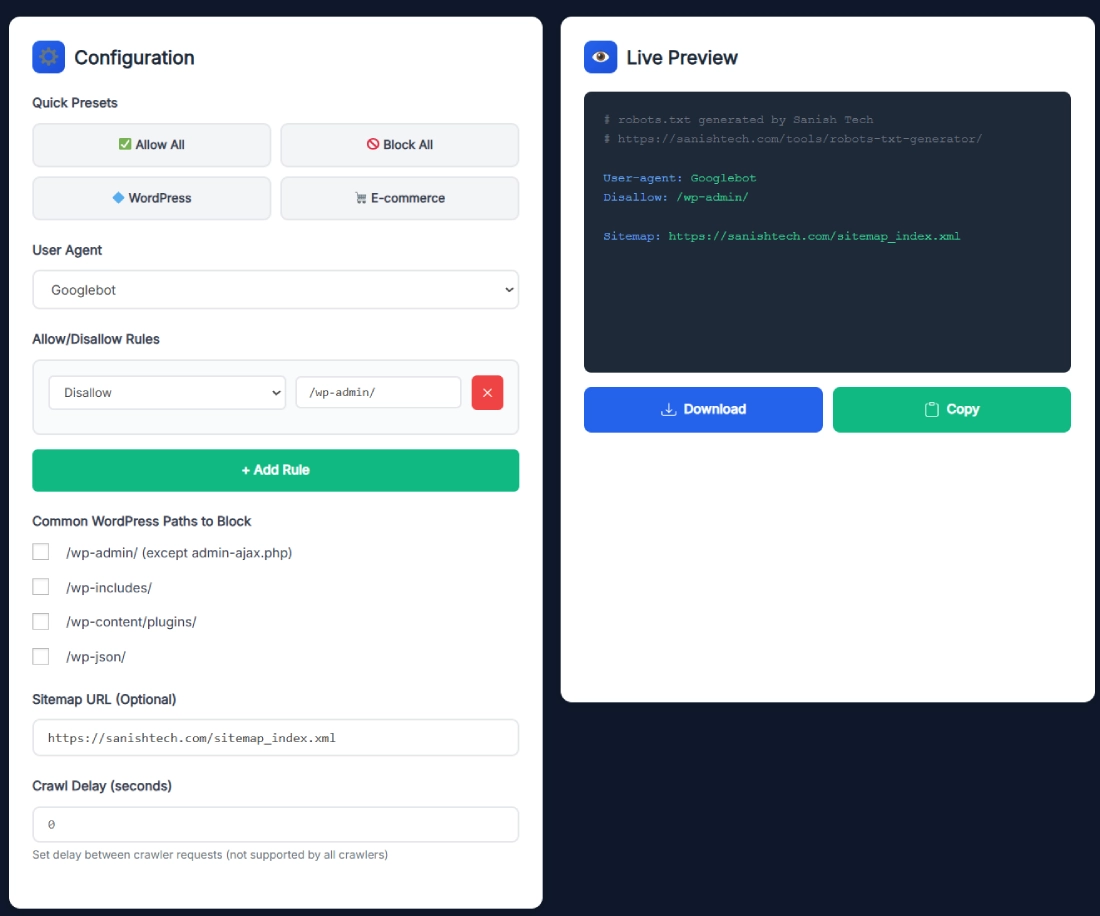

When you load the tool, you’ll see two main sections. On the left, there’s a configuration panel where you can select quick presets like Allow All, Block All, WordPress, or E-commerce. On the right, a live preview box shows your generated robots.txt file in real time. As you make changes, the preview updates instantly.

For SEO professionals, this tool saves hours of trial and error. For beginners, it removes the guesswork of learning how “User-agent” or “Disallow” directives work. You can even add your sitemap URL and crawl-delay preferences right from the same screen. If you need to quickly pull your sitemap URLs before adding them here, the XML Sitemap URL Extractor makes it easy to grab the exact sitemap file you want to reference.

Key Features for SEO Optimization

This isn’t just a text generator — it’s designed for SEO accuracy. The robots.txt file generator for SEO gives you precise control over search engine access, helping you avoid index bloat, duplicate content, and crawl waste.

Key features include:

- Quick Presets: One-click buttons for “Allow All,” “Block All,” “WordPress,” and “E-commerce” let you apply recommended base rules instantly.

- Custom User Agents: Add bots like Googlebot, Bingbot, or custom crawlers and define their access levels.

- Disallow/Allow Rules: Choose which directories to block (like /wp-admin/ or /cart/) and which to allow.

- Sitemap Integration: Add your sitemap URL (for example, https://sanishtech.com/sitemap_index.xml) so crawlers can find your content faster. For deeper sitemap auditing when you’re cleaning up what should and shouldn’t be crawled, the Sitemap URL Extractor is a useful companion.

- Live Preview: See the generated robots.txt update in real time and copy or download it instantly.

- SEO-Friendly Output: Follows best practices that help you avoid blocking critical content and keep crawling efficient.

These features make this free robots.txt generator online a must-have for webmasters, SEO specialists, and developers who want cleaner, more optimized crawling behavior.
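Here is roughly what a generator like this does behind the scenes. This minimal Python sketch turns user agents, Allow/Disallow rules, an optional crawl delay, and sitemap URLs into robots.txt text. The `Group` class and `build_robots_txt` function are hypothetical names for illustration. This is not the tool's actual source code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Group:
    """One robots.txt group: the user agents it targets and their rules."""
    user_agents: list[str]
    disallow: list[str] = field(default_factory=list)
    allow: list[str] = field(default_factory=list)
    # Crawl-delay: honored by some bots such as Bingbot, ignored by Googlebot.
    crawl_delay: int | None = None


def build_robots_txt(groups: list[Group], sitemaps: tuple[str, ...] = ()) -> str:
    """Render groups and sitemap URLs as robots.txt text (hypothetical helper)."""
    lines: list[str] = []
    for group in groups:
        if lines:
            lines.append("")  # a blank line separates groups
        lines += [f"User-agent: {ua}" for ua in group.user_agents]
        if not group.disallow and not group.allow:
            lines.append("Disallow:")  # an empty Disallow means "allow everything"
        lines += [f"Disallow: {path}" for path in group.disallow]
        lines += [f"Allow: {path}" for path in group.allow]
        if group.crawl_delay is not None:
            lines.append(f"Crawl-delay: {group.crawl_delay}")
    if sitemaps:
        if lines:
            lines.append("")
        lines += [f"Sitemap: {url}" for url in sitemaps]
    return "\n".join(lines) + "\n"


example = build_robots_txt(
    [Group(["*"], disallow=["/wp-admin/"], allow=["/wp-admin/admin-ajax.php"])],
    sitemaps=("https://example.com/sitemap_index.xml",),
)
print(example)
```

Each option becomes one directive line. Groups are kept separate so that every crawler can find the block that names it.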

How to Use the Online Robots.txt Creator Tool

Using this tool feels like adjusting your SEO settings — not like writing code. Everything is visual, fast, and beginner-friendly.

Here’s how to do it:

- Open the tool page: https://sanishtech.com/tools/robots-txt-generator-online/

- Click on a preset: “Allow All” for full crawl access, or “Block All” while your site is still under development.

- Select a user agent such as Googlebot or add your own.

- Add specific allow or disallow rules (e.g., /wp-admin/, /private/).

- Enter your sitemap URL, for example https://yoursite.com/sitemap_index.xml (the exact path depends on your CMS or SEO plugin).

- Check the live preview to confirm your setup.

- Click “Download” or “Copy” to get your file instantly.

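After generating it, upload the robots.txt file to your website’s root directory (often /public_html/ on shared hosting) so it is served at https://yoursite.com/robots.txt. Search engines fetch it automatically. Google generally caches the file for up to 24 hours, so changes can take up to a day to be picked up.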
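The two simplest presets correspond to well-known patterns. An empty `Disallow:` line blocks nothing, and `Disallow: /` blocks every path. The sketch below assumes that this is what “Allow All” and “Block All” produce. The `PRESETS` dictionary and `can_crawl` helper are illustrative and are not taken from the tool. Each preset is checked with Python's built-in `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Assumed preset output; the real tool may add more lines (e.g. a Sitemap).
PRESETS = {
    "Allow All": "User-agent: *\nDisallow:\n",    # empty Disallow blocks nothing
    "Block All": "User-agent: *\nDisallow: /\n",  # "/" is a prefix of every path
}


def can_crawl(robots_txt: str, agent: str, path: str) -> bool:
    """Parse robots.txt text and report whether `agent` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


for name, text in PRESETS.items():
    print(f"{name}: Googlebot may crawl /blog/ -> {can_crawl(text, 'Googlebot', '/blog/')}")
```

If a “Block All” file stays in place after launch, compliant crawlers are kept out of the whole site. Re-check the live file once the site goes public.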

Benefits of Using This Robots.txt File Generator for SEO

When it comes to SEO, small technical details often make the biggest difference — and the robots.txt file is one of them. This robots.txt file generator for SEO helps you manage how search engines interact with your website, keeping your crawl budget and site structure healthy.

Here’s why it’s beneficial:

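- Avoid duplicate content: You can block folders like /tag/ or /search/ to stop Google from crawling repetitive pages. Note that robots.txt controls crawling, not indexing. A blocked URL can still appear in results if other pages link to it, so use a noindex tag on a crawlable page when you need a URL kept out of the index. If you’re also standardizing preferred URLs across similar pages, the Bulk Canonical Checker can help you validate canonicals at scale.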

- Improve crawl efficiency: Direct crawlers toward your important pages while skipping low-value sections.

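- Keep bots out of private areas: Ask compliant crawlers to skip admin panels, test folders, and other non-public directories. This is not a security measure, so protect genuinely confidential content with authentication.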

- Simplify SEO management: Instead of hand-writing directives and risking syntax errors, you can generate a valid robots.txt in seconds and upload it.

- Add sitemap visibility: Including your sitemap link helps search engines discover new pages faster.

By using this generator, you’re not just creating a text file — you’re giving search engines a clear roadmap for how to treat your site.

Real Example – Creating a Robots.txt for a WordPress Site

Let’s say you run a WordPress website and want to make sure bots index only your public content. Here’s what your generated robots.txt might look like after using this free robots.txt generator online:

```text
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://example.com/sitemap_index.xml
```

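That’s it: short, clean, and functional. One caveat: /wp-includes/ holds core JavaScript and CSS files. Google recommends against blocking resources it needs to render your pages, so many WordPress sites leave that line out. Upload the file to your domain root (/public_html/ on many hosts), and your SEO foundation is more organized.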
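For Google, the order of rules matters less than you might think. Under Google's documented behavior and RFC 9309, the most specific matching rule wins, meaning the one with the longest path pattern. Allow also wins a tie. As a result, Allow: /wp-admin/admin-ajax.php overrides Disallow: /wp-admin/ no matter which line comes first. Python's `urllib.robotparser` works differently: it applies the first matching rule in file order, so it reports admin-ajax.php as blocked for this file.

Below is a rough sketch of longest-match evaluation with `*` and `$` wildcard support. `is_allowed` is a hypothetical helper. It skips user-agent group selection by assuming all rules belong to the group that applies.

```python
from __future__ import annotations

import re
from urllib.robotparser import RobotFileParser


def _to_regex(pattern: str) -> re.Pattern[str]:
    """Turn a robots.txt path pattern into a regex: '*' matches anything,
    and a trailing '$' anchors the end of the path."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest-match evaluation: the matching rule with the longest pattern wins,
    Allow beats Disallow on a tie, and a path no rule matches is allowed."""
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:  # an empty value (e.g. "Disallow:") restricts nothing
            continue
        if _to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


# The rules from the WordPress example above, in the same order.
WORDPRESS_RULES = [
    ("Disallow", "/wp-admin/"),
    ("Disallow", "/wp-includes/"),
    ("Allow", "/wp-admin/admin-ajax.php"),
]

for path in ("/wp-admin/options.php", "/wp-admin/admin-ajax.php", "/hello-world/"):
    print(path, is_allowed(WORDPRESS_RULES, path))

# For comparison: urllib.robotparser uses the first matching rule in file order.
rp = RobotFileParser()
rp.parse(["User-agent: *"] + [f"{d}: {p}" for d, p in WORDPRESS_RULES])
print("urllib.robotparser, admin-ajax.php:", rp.can_fetch("*", "/wp-admin/admin-ajax.php"))
```

If you test your file with a script, check which matching rule the parser actually applies. Otherwise you may "fix" a problem that Google never sees.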

Pro Tips for Optimizing Robots.txt Files

You don’t have to be a developer to make the most out of your robots.txt file. A few smart tweaks can help you get more out of it.

Here are some expert-level tips:

- Always include your sitemap link so crawlers find your pages faster.

- Avoid blocking CSS or JS files — Google needs them to understand your site layout.

- Don’t use robots.txt to hide sensitive data. The file is publicly readable and only asks well-behaved crawlers to stay away. It doesn’t stop people or malicious bots.

- Use crawl delay carefully. Googlebot ignores the Crawl-delay directive entirely, while crawlers such as Bingbot honor it, so it only affects the bots that support it.

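- Test your robots.txt before finalizing it. Google Search Console’s robots.txt report, which replaced the older “robots.txt Tester,” shows whether Google could fetch your file and flags any errors or warnings.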

If your site ever grows into hundreds or thousands of pages, revisit your robots.txt setup every few months. A clean configuration can prevent crawl waste and keep your SEO performance steady.
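Here is a small sketch of how per-bot settings work. It uses Python's `urllib.robotparser` on a hypothetical file that sets a crawl delay for Bingbot only. A crawler follows only the one group that best matches its name. That is why the bingbot group repeats the /wp-admin/ rule: otherwise Bingbot would ignore the rules in the `*` group. Googlebot gets no delay here, which matches reality because Google ignores Crawl-delay anyway.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: only Bingbot gets a crawl delay.
ROBOTS_TXT = """\
User-agent: bingbot
Disallow: /wp-admin/
Crawl-delay: 10

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("bingbot", "Googlebot"):
    # crawl_delay() returns None when the matching group sets no delay.
    print(agent, parser.crawl_delay(agent), parser.can_fetch(agent, "/wp-admin/"))
```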

FAQs

What is a robots.txt file used for?

A robots.txt file is a small but powerful instruction guide for search engine crawlers. It tells them which pages, folders, or files they can access and which ones to skip. By doing this, it helps keep crawlers out of private or low-value sections, reduce crawl waste, and improve your site’s overall crawl efficiency. It is not an access control, though: the file is public, and compliant bots follow it voluntarily.

How do I create a robots.txt file online?

Using the Free Robots.txt Generator Online makes the process simple. You select rules, choose what to allow or disallow, add your sitemap URL, and click “Generate.” The tool automatically builds a valid robots.txt file, ready to download and upload to your root directory. No coding or manual formatting is required.

Is this robots.txt generator free to use?

Yes, this online robots.txt creator tool is completely free and browser-based. You don’t need to create an account, install anything, or pay hidden fees. Generate, copy, or download unlimited robots.txt files for different sites instantly. It’s built for webmasters, bloggers, and SEO professionals who value simplicity and precision.

What should I disallow in my robots.txt for WordPress?

For WordPress websites, it’s common to disallow /wp-admin/ while keeping /wp-admin/admin-ajax.php allowed, because some themes and plugins call it from public pages. Many guides also suggest disallowing /wp-includes/. However, that folder holds core JavaScript and CSS files that Google uses to render your pages, so blocking it can do more harm than good. This setup keeps search engines out of the backend and focused on your actual blog posts, pages, and publicly visible site content.

How do I add my sitemap in robots.txt?

You just need to add a new line that says Sitemap: followed by your full sitemap URL, such as https://example.com/sitemap_index.xml. The robots.txt file generator for SEO includes a built-in field for this, automatically formatting the line correctly. This helps Google and Bing discover your website pages faster.
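Here is a minimal Python sketch of that formatting step, with a check that the line parses. The `sitemap_line` helper is hypothetical. It rejects relative paths, since the Sitemap directive expects a full URL. Python's `urllib.robotparser` (3.8+) then reads the sitemap back with `site_maps()`.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def sitemap_line(url: str) -> str:
    """Return a Sitemap directive, rejecting relative URLs (a full URL is expected)."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"Sitemap URL must be absolute, got {url!r}")
    return f"Sitemap: {url}"


robots_txt = "\n".join([
    "User-agent: *",
    "Disallow: /wp-admin/",
    "",
    sitemap_line("https://example.com/sitemap_index.xml"),
])

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
# Sitemap lines are independent of user-agent groups, so they can go anywhere.
print(parser.site_maps())
```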

Does a robots.txt file affect SEO ranking?

While it doesn’t directly improve rankings, a properly structured robots.txt file makes crawling more efficient. It tells bots where to go and what to skip, saving crawl budget for the pages that matter. This can help important pages get crawled and indexed sooner. It also gives you better control over which parts of your site search engines spend time on.

Can I block specific bots using robots.txt?

Yes. You can target individual crawlers by their “User-agent” names. For instance, you can block certain scraping bots while allowing Googlebot and Bingbot. Keep in mind that compliance is voluntary: abusive bots may ignore the file and need to be blocked at the server or firewall level. The free robots.txt generator online lets you set these directives easily, so you don’t have to memorize syntax or risk blocking important crawlers that index your site.
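As an illustration, the sketch below blocks one crawler entirely and leaves every other crawler with the normal rules. It then verifies the result with Python's `urllib.robotparser`. “ExampleScraperBot” is a made-up user-agent token, so swap in the real name of the bot you want to block.

```python
from urllib.robotparser import RobotFileParser

# "ExampleScraperBot" is hypothetical; use the real user-agent token instead.
ROBOTS_TXT = """\
User-agent: ExampleScraperBot
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("ExampleScraperBot", "Googlebot", "Bingbot"):
    print(agent, parser.can_fetch(agent, "/blog/hello-world/"))
```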

Should I allow Googlebot access to admin areas?

No. Googlebot and other crawlers don’t need access to private or admin directories, which usually sit behind a login anyway, so crawling them just wastes crawl budget. Disallow folders like /admin/ or /wp-admin/. Remember that robots.txt is public, though: listing a path there reveals it, so secure admin areas with authentication rather than relying on robots.txt. Keep public-facing areas open so that the right pages appear in Google.

What’s the best robots.txt setup for eCommerce sites?

For eCommerce stores, allow bots to crawl key pages like products and categories. Disallow cart, checkout, and account-related URLs to prevent duplicate or non-SEO pages from being indexed. Also, include your sitemap link for faster product discovery. This setup keeps your online store optimized and search-friendly without overexposing sensitive areas.
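Here is a sketch of that setup, with a quick sanity check in Python. The /cart/, /checkout/, /my-account/, /product/, and /product-category/ paths are assumptions based on WooCommerce-style defaults. Your store’s URLs may differ, so adjust them before relying on the output.

```python
from urllib.robotparser import RobotFileParser

# Assumed WooCommerce-style paths; replace them with your store's real URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /my-account/

Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Path -> whether we expect it to be crawlable.
checks = {
    "/product/blue-mug/": True,          # product pages stay crawlable
    "/product-category/kitchen/": True,  # category pages stay crawlable
    "/cart/": False,
    "/checkout/": False,
    "/my-account/orders/": False,
}
for path, expected in checks.items():
    actual = parser.can_fetch("Googlebot", path)
    print(f"{path}: {'ok' if actual == expected else 'UNEXPECTED'}")
```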

Where should I upload my robots.txt file?

Upload your robots.txt file to the root directory of your domain — for example, https://yourdomain.com/robots.txt. Search engines automatically check this location during crawling. Once uploaded, the file becomes publicly accessible and starts guiding bots according to your specified rules, helping you maintain a cleaner, more efficient SEO structure.
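Crawlers only look for the file at the root, so each host needs its own copy. Rules served from https://example.com/robots.txt don’t apply to https://shop.example.com, or to the same host on a different protocol or port. This small Python sketch works out which robots.txt governs a given page URL. The `robots_url` helper is hypothetical.

```python
from urllib.parse import urlsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs `page_url`.

    The file lives at the root of the page's own scheme, host, and port,
    so each subdomain or protocol variant needs its own copy.
    """
    parts = urlsplit(page_url)
    host = parts.netloc.rsplit("@", 1)[-1]  # drop any user:password@ prefix
    return f"{parts.scheme}://{host}/robots.txt"


print(robots_url("https://www.example.com/blog/post/?utm_source=newsletter"))
# → https://www.example.com/robots.txt
print(robots_url("https://shop.example.com:8443/cart/"))
# → https://shop.example.com:8443/robots.txt
```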